I have always had a passion for creating music and have been making rap music with my friends for many years. While I was in school studying machine learning, I thought it would be interesting and fun to try to train an AI to produce rap beats.

MIDI Files

Table of voice messages used in MIDI files and their contents

The Musical Instrument Digital Interface (MIDI) protocol enables electronic musical devices such as synthesizers to communicate with computers. MIDI files can also store a digital representation of an entire song, including all of its instruments, key and tempo changes, and even lyrics. Importantly for machine learning, the timing of each note in a MIDI file can be used to discretize the notes into arrays that resemble sheet music. Each index of the array is one step of the song at a fixed resolution, such as a quarter, eighth, or sixteenth note. These arrays can then be used as input to a neural network.
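As a rough illustration of that discretization step, the sketch below snaps note-on events to a sixteenth-note grid. It assumes the events have already been pulled out of the MIDI file as `(absolute_tick, pitch)` pairs (for example with a MIDI parsing library) and that the file's ticks-per-quarter-note value is known; the function name `quantize_onsets` and its parameters are hypothetical, not taken from the original project.

```python
from typing import List, Tuple


def quantize_onsets(
    events: List[Tuple[int, int]],
    ticks_per_beat: int,
    steps_per_beat: int = 4,
    n_steps: int = 64,
) -> List[List[int]]:
    """Map (absolute_tick, midi_pitch) note-on events onto a fixed step grid.

    Hypothetical helper. With steps_per_beat=4 and a quarter-note beat,
    each grid step is one sixteenth note. Returns n_steps lists, each
    holding the sorted pitches whose onset rounds to that step.
    """
    ticks_per_step = ticks_per_beat / steps_per_beat
    grid: List[List[int]] = [[] for _ in range(n_steps)]
    for tick, pitch in events:
        # Snap to the nearest sixteenth (round half up for non-negative ticks).
        step = int(tick / ticks_per_step + 0.5)
        if 0 <= step < n_steps:  # ignore notes past the end of the clip
            grid[step].append(pitch)
    return [sorted(pitches) for pitches in grid]


# Usage: 480 ticks per quarter note, so one sixteenth is 120 ticks.
grid = quantize_onsets([(0, 60), (0, 64), (240, 67), (470, 60)], ticks_per_beat=480)
```

A slightly early note at tick 470 lands on step 4, which is the kind of small timing error that quantizing to a grid is meant to absorb.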

Chord Identification

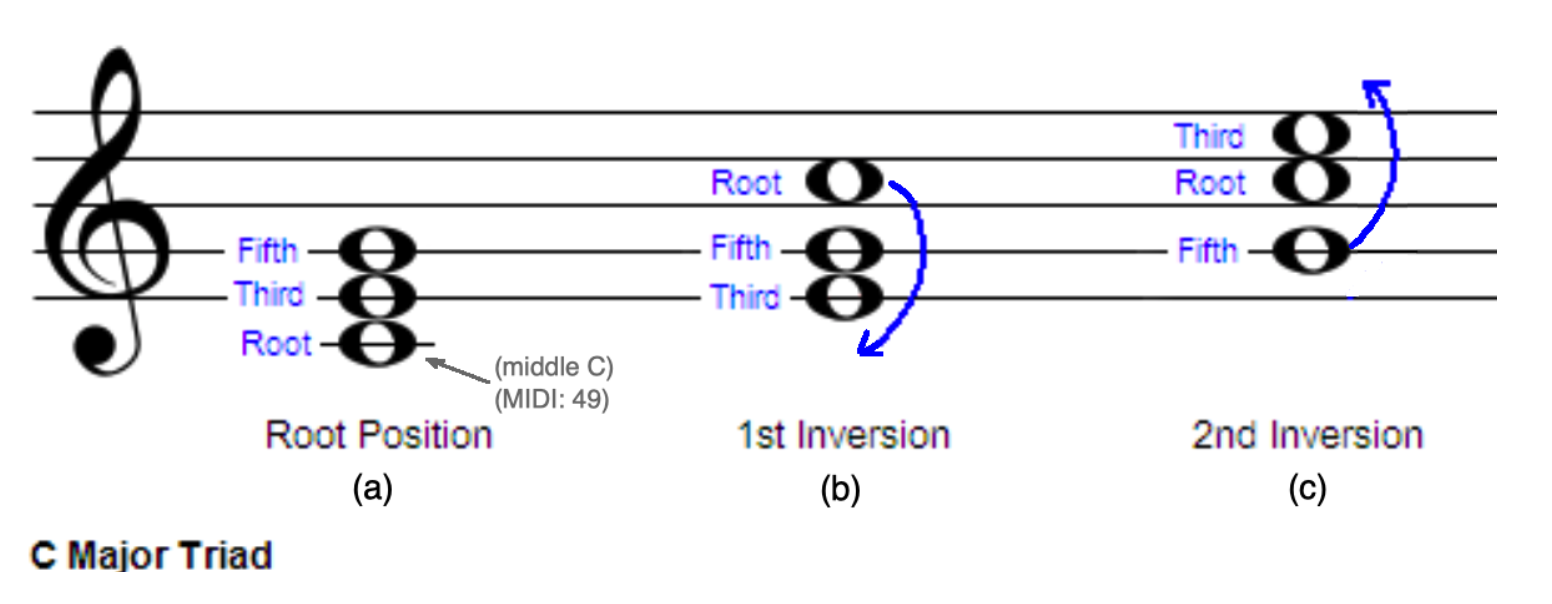

Classification of standard, first inversion, and second inversion chords on a treble clef.

Western songs are generally made up of a series of chords, which largely define their tone and style. It is therefore important to be able to look at the notes being played at any moment in a song and determine which chord they belong to. To do this, I needed to learn some music theory and derive an algorithm for identifying chords. MIDI represents a note as an integer from 0 to 127, where middle C on a piano is 60. Adjacent integers are one half step apart, so a chord can be identified from the number of half steps between its notes.
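One common way to turn half-step counts into chord names is template matching on pitch classes. The sketch below is that technique, not necessarily the exact algorithm used here: it reduces every note modulo 12, tries each pitch class as the root, and compares the intervals against a small hypothetical table of triads. Because octaves are discarded, root-position chords and their first and second inversions map to the same answer.

```python
from typing import List, Optional, Tuple

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Hypothetical triad templates: semitone offsets from the root, mod 12.
CHORD_TEMPLATES = {
    "maj": {0, 4, 7},
    "min": {0, 3, 7},
    "dim": {0, 3, 6},
}


def identify_chord(pitches: List[int]) -> Optional[Tuple[str, str]]:
    """Return (root_name, quality) for a set of MIDI pitches, or None.

    Pitches are reduced to pitch classes, so C-E-G, E-G-C and G-C-E
    (root position, first and second inversion) all give ("C", "maj").
    """
    classes = {p % 12 for p in pitches}
    for root in sorted(classes):
        # Half steps from the candidate root to every sounding pitch class.
        intervals = {(pc - root) % 12 for pc in classes}
        for quality, template in CHORD_TEMPLATES.items():
            if intervals == template:
                return NOTE_NAMES[root], quality
    return None  # not a triad this table knows about
```

For example, `identify_chord([57, 60, 64])` (A, C, E) only matches when A is tried as the root, where the intervals are {0, 3, 7}, so it returns `("A", "min")`. Extending the table with sevenths or suspended chords is a matter of adding more interval sets.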

Rhythm Identification

Like the chord array, whose indices represent beats, rhythm must also be represented as an array so that patterns in rhythm can be detected. If a note starts on a particular beat, that index of the array is set to 1; otherwise it is 0. The rhythm array has to be kept separate from the chord array because the chord array records which chord is sounding at every beat, so that sustained chords are captured, rather than only the beat on which each chord was first played.
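The difference between the two arrays can be shown with a small sketch. It assumes chords arrive as hypothetical `(start_step, end_step, label)` spans with an exclusive end: the rhythm array marks only the attack, while the chord array is filled for every step the chord sustains.

```python
from typing import List, Optional, Tuple


def rhythm_and_chord_arrays(
    spans: List[Tuple[int, int, str]], n_steps: int = 64
) -> Tuple[List[int], List[Optional[str]]]:
    """Build aligned rhythm and chord arrays from (start, end, label) spans.

    Hypothetical helper; end_step is exclusive.
    rhythm[i] is 1 only where a chord starts.
    chords[i] holds the label of whatever chord is sounding at step i.
    """
    rhythm = [0] * n_steps
    chords: List[Optional[str]] = [None] * n_steps
    for start, end, label in spans:
        if 0 <= start < n_steps:
            rhythm[start] = 1  # mark only the attack
        for i in range(max(start, 0), min(end, n_steps)):
            chords[i] = label  # fill every step the chord is held


    return rhythm, chords


# Usage: two chords, each held for half a measure of sixteenth notes.
rhythm, chords = rhythm_and_chord_arrays([(0, 8, "C maj"), (8, 16, "A min")], n_steps=16)
```

Here `rhythm` has only two 1s, while `chords` still reports "C maj" on step 7, long after that chord was struck.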

Experiments

Two learning agents were used for this experiment: a 4-layer neural network (with layers of 512, 1024, 256, and 128 units, respectively) using the ReLU activation function, and a Long Short-Term Memory (LSTM) network with 3 hidden layers of size 64 that processed 8 beats at a time.
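To make the feed-forward architecture concrete, here is a NumPy sketch of its forward pass only (no training). Several details are assumptions not stated above: the input is taken to be a single 64-step melody array, the final 128-unit layer is read as the output (64 kick steps followed by 64 hi-hat steps) with a sigmoid instead of ReLU, and weights use He initialization. The names `init_params`, `forward`, and `to_drum_arrays` are hypothetical.

```python
import numpy as np

IN_DIM = 64                     # assumed: one 64-step melody array per example
WIDTHS = [512, 1024, 256, 128]  # layer widths from the text


def init_params(rng: np.random.Generator):
    """He-initialized weights, a common choice for ReLU layers."""
    params, fan_in = [], IN_DIM
    for width in WIDTHS:
        W = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, width))
        b = np.zeros(width)
        params.append((W, b))
        fan_in = width
    return params


def forward(params, x: np.ndarray) -> np.ndarray:
    h = x
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)  # ReLU on the hidden layers
    W, b = params[-1]
    # Assumed sigmoid output: a per-step probability of a drum hit.
    return 1.0 / (1.0 + np.exp(-(h @ W + b)))


def to_drum_arrays(probs: np.ndarray, threshold: float = 0.5):
    hits = (probs >= threshold).astype(int)
    return hits[..., :64], hits[..., 64:]  # assumed split: kick, hi-hat


rng = np.random.default_rng(0)
params = init_params(rng)
melody = rng.integers(0, 2, size=(1, IN_DIM)).astype(float)
kick, hihat = to_drum_arrays(forward(params, melody))
```

Thresholding the probabilities yields the same kind of binary rhythm arrays used as training targets, which can then be written back out as MIDI notes.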

Both networks were trained for 50 epochs on a set of 20 example beats, each consisting of MIDI files for the melody, kick drum, and hi-hats, all 4 measures long. Using the methods described above, these MIDI files were processed into arrays of length 64, with each index representing a sixteenth note. The trained agents were then given 13 test melody MIDI files and generated kick drum and hi-hat MIDI files to accompany each melody.
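The array length follows from simple arithmetic, assuming 4/4 time: 4 measures × 4 beats × 4 sixteenths = 64 steps. For the LSTM, one way to feed "8 beats at a time" is to cut each 64-step array into windows of 8 steps. The sketch below treats a "beat" as one array index, as the rest of the post does, and leaves the stride open because the text does not say whether the windows overlapped; `windows` is a hypothetical helper.

```python
from typing import List

STEPS_PER_BEAT = 4      # sixteenth-note resolution
BEATS_PER_MEASURE = 4   # assumes 4/4 time
MEASURES = 4
N_STEPS = STEPS_PER_BEAT * BEATS_PER_MEASURE * MEASURES  # 64


def windows(seq: List[int], size: int = 8, stride: int = 8) -> List[List[int]]:
    """Cut a step sequence into fixed-size windows for a sequence model.

    stride == size gives non-overlapping chunks (8 per 64-step clip);
    stride == 1 gives a sliding window (57 per 64-step clip).
    """
    return [seq[i:i + size] for i in range(0, len(seq) - size + 1, stride)]


chunks = windows(list(range(N_STEPS)))
sliding = windows(list(range(N_STEPS)), stride=1)
```

With only 20 training clips, a sliding window multiplies the number of training sequences, at the cost of heavily correlated samples.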

Results

The neural network and the LSTM had contrasting styles, each with its own strengths and weaknesses. The neural network produced rhythms that were erratic and unpredictable, though interesting, whereas the LSTM created extremely structured rhythms that, on average, repeated the same notes every quarter note. A compilation of the songs is available to listen to on my SoundCloud.